This is the motivation for developing an algorithm to automatically identify the key points of a face and construct the appropriate set of lines from these points. Once we have these lines, the morph can be computed easily using the distort and dissolve method described in Beier and Neely's algorithm, which we will also outline below.

The complete process of going from two distinct images to a single morphed image automatically includes three main steps. The first and most important to this paper is using generalized methods in color theory to find key points in the faces. From these points, we then construct the corresponding set of lines with which we can distort the two images so the important features in each match up with each other. Finally, we can dissolve the two images together by taking a weighted average of each pixel in the distorted images.

The general term "morphing" is used to denote the distorting and dissolving steps. In some senses, morphing implies a progression from one image to another, as in a video clip beginning with one image and ending with the other for example. In this paper and the algorithm we outline, however, we will only produce a single image when we morph two images together. We allow this simplification since it is sufficiently easy to produce any desired animation from a series of single images, all of which can be produced by the algorithm.

We will now introduce our method for facial feature identification using color theory and facial geometry.

Facial Feature Identification

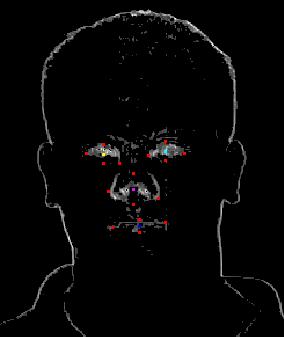

In order to find the important features within the face, we first construct a new image which is easier to work with. The new image is black and white, with the shade of each image equal to the difference in intensity between the corresponding pixel in the original image and all of its surrounding pixels, where we define the shade of a pixel to be its RGB value such that the values for all three colors are equal (this is what makes it black and white) and we define the intensity of a pixel to be the average of its red, green and blue values. We compute the new image, which is the difference in intensity of all surrounding pixels by first constructing an image only considering the difference in intensity of each pixel and the pixel to its left, and then constructing an image considering the difference in intensity of each pixel and the pixel above it, and then constructing a third image whose with pixel intensities equal to the sum of the pixel intensities in the first two images we produced.

|  |

| The color intensity difference image for the sample image. |

Once we have this image, we need to search for sizeable blocks of intensity, which denote significant changes in color in the original image. These blocks correspond to the important facial features, since these features are always characterized either by drastic changes in color, as are the case with the eyes and mouth, or by a perimeter shadow, as is the case with the nose.

We locate the most significant blocks in the image using a process we call "growing". The process entails considering each intensely colored pixel and then looking at the pixels surrounding that pixel. If any of those pixels meet a certain threshold of intensity, we paint those pixels the same color as the current pixel we are considering. We then recursively paint the neighboring pixels' neighbors and so on, until no more pixels can be painted and we have grown our block of pixels to be as large as it can be.

In order to make this process more accurate, we implemented a few enhancements. First, in order to reduce error, we darken all pixels in the image by an amount proportional to their distance from the center of the image. This is done based on the assumption that facial features will fall towards the center of the image. By reducing the intensity of pixels farther from the center, we effectively reduce the chance of growing blocks on erroneous features, like cheeks or even the side of the face. Since these blocks would interfere with the blocks we are actually trying to locate, and since we are not interested in these features for morphing, removing them earlier is a good simplification of the problem. We have also found that darkening pixels more in the horizontal direction than the vertical direction is more effective, since there is greater variance in the vertical distribution of important features than there is in the horizontal distribution.

Another enhancement we implemented was to intensify pixels that are surrounded on all sides by intensely colored pixels, regardless of their intensity. This increases the uniformity of blocks and allows us to see that those pixels do in fact belong in the blocks and that their lack of intensity is just error. The darkness, or lack of intensity, is due to the fact that changes of intensity in the original image often take a few pixels in a direction to fully propgate, and as the change comes to its completion, the gradient of color will become less sharp, and so the corresponding pixel in the new image will lack intensity. It still is, however, part of the change, so including it in the block is not an error.

Once we have identified all of the blocks, we choose which are most likely to match up to each to the major features -- eyes, nose and mouth -- by checking for overall size and shape of the blocks. In general, the larger blocks represent more prominent features, so we will assume that the largest blocks correspond to the important features we're looking for. Sometimes shape can give away that a block is erroneous if, for example, it is taller than it is wide. Since all facial features, including the nose, which is just identified as its tip, are wider than they are tall, it seems relatively safe to throw away excessively tall blocks.

From the blocks we identify as the facial features, we locate the left-most, right-most, top-most and bottom-most points of the block and connect them to form a perimeter around the features. We then pass these lines to the morpher as part of the facial data.

Facial Perimeter Identification

The second aspect of facial recognition involves finding the region of the image that is occupied by the face. We do this by first locating the center of the face by taking the average of its left-most and right-most points (usually its ears). We identify where the background of the image ends and the ears begin by again checking for color intensities in the images. We find the background intensity by looking in the upper corners, and then we scan over from the side of the image until the intensity of the pixel we are on is no longer in the range of the background. At this point, we know we have reached the face,

After we have the center of the face, we cast lines out from this point at different angles, starting from 0 degrees and going all the way to 360 degrees at intervals of n degrees. For each angle, we move along the line out from the center of the face, looking at the intensity of the pixel we are on for each step along the way. When we reach a pixel whose intensity matches the background intensity, we know we have just transitioned from the face to the background, so we can set the pixel as a border pixel of the face. If we repeat this process for each interval of n degrees, we end up with 360 / n border points, which we can connect together to form 360 / n lines, or a complete outline of the face.

In order to increase the accuracy of the outline, we recommend a value of n around 15 degrees. We also experimented with limiting the range at which we cast out lines from the center, and concluded that casting out lines between the angles of -45 degrees and 225 degrees generally do not produce any border points since those lines just follow a path through the neck and into the clothing, and then reach the boundary of the image before every coming into contact with its background. In most experimental data, this process produced about 20 border points, which was seemingly enough to accurately model the shape of the face for morphing.

| --> |  |

| --> |  |

This algorithm works by finding the appropriate pixel in the source image to sample for each pixel in the destination image. It does this by distorting the field around each line in the morphing set so that points around those lines in the original images move towards the corresponding lines from the other image in the new image.

The extent to which this behavior is exhibited depends on three constants that need to be defined for the algorithm. The first, a, is a number greater than zero which represents how rigidly points on the lines in one images will move to points on the line in the other image. The closer to zero that a gets, the more rigid the motion will be. Since our morphing sets contain many lines, we do not necessarily want pixels on any of the lines to move exactly with the lines lest we will produce more of a shattered glass effect than the intended result, so we choose a large number for a. The next constant is b, which controls how much pixels are influenced by lines closer to them as opposed to those farther away. It is traditional to keep b in the range of 0.5 to 2.0, but we wanted to make sure that pixels were most influenced by nearby lines, so we set b = 2.0. The final constant is p, which controls whether longer lines have more influence over the image than shorter ones. Since the length of lines will be arbitrary in our morphing sets since they were constructed to outline facial features uniformly, we do not want longer lines to have any more weight than shorter ones, so we set p = 0.

Using these three constants, the Beier-Neely algorithm will distort the two images so that they become the same shape, and then we can simply go through pixel by pixel and take the average color of each and construct a new image based on these values. Once we have this image, we are done with the morph, and we have effectively gone from two facial images to a morphed combination of the two of them, without any user input.

| (1) |  | + |  | = |  |

| (2) |  | + |  | = |  |

| (3) |  | + |  | = |  |

As can easily be seen, all features were accurately identified in all six of the source images, and they all seem to be mapped and combined with relatively little facial error. Note that the shoulders and shirt do not necessarily match up, but we do not mind this because we did not try to match them up in the first place.

Several examples did trip up the algorithm though. Images of people with glasses often had unrecognizable eyes, and dark-skinned people sometimes noses and mouths that were difficult to pick out. All in all, the algorithm identified all four facial features correctly on about two thirds of all trials, and it accurately found a facial border for ever trial. The problem is that whenever it failed to recognize a feature, it not only does not find the right feature, but it also mislabels some other feature as that features. There have been images for which the left eyebrow is identified as the left eye, which left the left eye to be the nose and then the nose to be the mouth. This set the morpher up for failure since it was fed incorrect map points, and never produced good results.

All in all, this algorithm shows promise for being developed into something that does automatic facial morphing with greater accuracy in the future. Although we have already developed many enhancements to optimze the algorithm, there is still much more that can be done, and much more to be tweaked in our code, so there is definitely room to make this even better than it is now. In the current state, this seems to be a good algorithm for use, and our implementation seems to be relatively effective given the amount of time we had to work on it.

./ffr image1.ppm image2.ppm

./morph image1.ppm image2.ppm outfile.ppm [weight]

Further, the output from ffr is to stdout, and the input to morph is through stdin, so they work best when the output from ffr is piped directly into morph.

The code has been compiled under both Windows and Linux, but it is only stable under Linux at this time. The executables we provide have been compiled under Linux. We have not provided a makefile, so to compile, use:

gcc -o [ffr/morph] -lm ppmio.c [ffr/morph].c